#-Python and Active Directory Integration Services

Launch Your Business Online: Build Your Company Website in Django 5 and Deploy It on cPanel

If you’re dreaming of a professional company website that reflects your brand, boosts your credibility, and grows with your business—this might just be the breakthrough you need. Gone are the days when building a website meant hiring expensive developers or navigating complex codebases. With Django 5 and an easy cPanel deployment, you can take control of your company’s digital presence from start to finish—even if you’re just starting out.

So what’s the smartest way to go about it?

Let’s walk through how you can build your company website in Django 5, deploy it on cPanel, and make your business thrive online.

Why Django 5 is the Perfect Framework for Business Websites

When it comes to building robust, secure, and scalable websites, Django has long been a favorite among developers. But now, with Django 5, it's better than ever—faster, cleaner, and packed with features tailored to modern web development.

Here’s why Django 5 stands out for building company websites:

✅ Built-in Security Features

Security isn't an afterthought in Django—it's baked right in. Django 5 offers strong protections against threats like SQL injection, cross-site scripting, and CSRF attacks. When you’re handling sensitive business data, this level of security is crucial.

✅ Scalable Architecture

Whether you're starting a small business website or planning for high traffic in the future, Django's modular design lets you scale as needed.

✅ SEO-Friendly URLs and Clean Code

Search engine visibility starts with clean, semantic URLs. Django's powerful routing system helps you build readable, indexable URLs for better rankings.

✅ Built for Rapid Development

Get your website up and running quickly with Django’s reusable components and rapid development capabilities. That means you spend less time on technical hurdles and more time growing your business.

What is cPanel – And Why Use It for Deployment?

If Django is your engine, cPanel is your garage.

cPanel is a web hosting control panel that simplifies the process of managing your website. It offers an intuitive dashboard that lets you handle everything from file management and database creation to email accounts and security settings.

Why choose cPanel for deployment?

User-Friendly Interface: Even non-tech users can manage hosting tasks with ease.

Integrated Tools: Databases, domains, SSL certificates—all managed from one place.

Widespread Availability: Most popular web hosting services offer cPanel, making it an accessible choice for businesses everywhere.

Supports Python Apps: With the right hosting plan, you can deploy Django apps smoothly using the Python App interface.

Let’s Talk Strategy – What Makes a Good Business Website?

Before jumping into development, it’s smart to define what your company website needs to do. Your website isn’t just a digital flyer—it’s your 24/7 salesperson, customer service rep, and branding platform all rolled into one.

A successful business website should:

Clearly state what your business does

Showcase your services or products

Include trust-builders like testimonials, certifications, or case studies

Offer ways for visitors to contact you

Be mobile-friendly and fast-loading

Use modern design and UX principles

With Django 5, all of these can be implemented beautifully, thanks to its flexibility and integration with frontend technologies.

Building Your Company Website in Django 5: A Step-by-Step Overview

Here’s an overview of how you’ll go from idea to live website using Django 5:

1. Set Up Your Development Environment

Start by installing Python, Django 5, and setting up your project directory. Use virtual environments to manage dependencies effectively.

```bash
python -m venv env
source env/bin/activate
pip install django
django-admin startproject companysite
```

2. Create Your Core Pages

Design the main structure of your site:

Home

About Us

Services / Products

Contact Page

Blog or Updates section

Use Django views, models, and templates to create these pages. You can use the built-in admin dashboard to manage your content.

3. Add Dynamic Features

Integrate forms for contact inquiries, user sign-ups, or newsletter subscriptions. Django’s form handling system makes this a breeze.

4. Optimize for SEO and Performance

Include metadata, image alt tags, clean URLs, and readable content. Django lets you define custom URLs and templates that search engines love.
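As a rough illustration of the clean-URL idea, the sketch below turns a page title into a URL-friendly slug using only the standard library. Django ships its own helper for this, `django.utils.text.slugify`; the `make_slug` function here is a hypothetical stand-in so the example runs without Django installed.

```python
import re
import unicodedata


def make_slug(title: str) -> str:
    """Return a lowercase, hyphen-separated slug for a page title (hypothetical helper)."""
    # Fold accented characters to their closest ASCII form and drop the rest.
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Remove anything that is not a letter, digit, underscore, space, or hyphen.
    cleaned = re.sub(r"[^\w\s-]", "", ascii_text.lower())
    # Collapse runs of whitespace and hyphens into a single hyphen.
    return re.sub(r"[-\s]+", "-", cleaned).strip("-_")


if __name__ == "__main__":
    print(make_slug("Our Services & Products"))
```

A slug like this could back a URL such as `/services/our-services-products/`, which is easier for both visitors and search engines to read than a numeric ID.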

5. Test on Localhost

Before deploying, test everything on your local server. Make sure all links work, forms submit properly, and there are no error messages.

Deployment Time: How to Host Django 5 on cPanel

Deployment can often be the most intimidating part—but not anymore.

Here’s a simplified breakdown of how to deploy your Django website on cPanel:

✅ Step 1: Choose a cPanel Host with Python Support

Not all hosting providers offer Python or Django support. Choose one that offers SSH access and Python App management in cPanel (many VPS or cloud hosting options offer this).

✅ Step 2: Set Up Your Python App in cPanel

Log in to cPanel and use the “Setup Python App” feature:

Select a Python version compatible with Django 5 (Python 3.10 or later)

Set your app directory

Configure your virtual environment and add requirements.txt

✅ Step 3: Upload Your Project Files

You can upload your files via:

File Manager in cPanel

FTP client like FileZilla

Git (some cPanel providers allow Git integration)

✅ Step 4: Configure WSGI

Edit the passenger_wsgi.py file with the correct WSGI application path.

Example:

```python
import sys

# Make the project directory importable before loading the WSGI module.
sys.path.insert(0, '/home/yourusername/projectname')

from projectname.wsgi import application
```
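For context, the `application` object that `passenger_wsgi.py` imports is a WSGI callable. The sketch below is not Django's implementation; it is a hand-written stand-in (only the name `application` comes from the example above, the rest is illustrative) showing the interface the hosting server calls, exercised with the standard library's `wsgiref` helpers.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """Minimal WSGI callable: same interface as the object Django exposes in wsgi.py."""
    body = f"Hello from {environ.get('PATH_INFO', '/')}".encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]


def call_app(path):
    """Invoke the app once with a fake request and return (status, body)."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, response_headers, exc_info=None):
        captured["status"] = status

    body = b"".join(application(environ, start_response))
    return captured["status"], body.decode("utf-8")


if __name__ == "__main__":
    print(call_app("/about/"))
```

If the import in `passenger_wsgi.py` fails, the server has nothing to call, which is why the `sys.path` entry and module path need to match your actual project layout.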

✅ Step 5: Connect Your Database

Create a MySQL/PostgreSQL database through cPanel and update your Django settings.py with the database credentials.
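One way to wire in those credentials without hard-coding them is to read them from environment variables. The fragment below is a sketch of what could go in `settings.py`; the variable names (`DB_NAME`, `DB_USER`, `DB_PASSWORD`, `DB_HOST`, `DB_PORT`) are assumptions rather than names cPanel sets for you, and the MySQL engine additionally needs a database driver such as `mysqlclient` installed.

```python
import os


def database_settings(env=os.environ):
    """Build a Django DATABASES dict from environment variables (names are assumptions)."""
    return {
        "default": {
            "ENGINE": "django.db.backends.mysql",  # or "django.db.backends.postgresql"
            "NAME": env["DB_NAME"],
            "USER": env["DB_USER"],
            "PASSWORD": env["DB_PASSWORD"],
            "HOST": env.get("DB_HOST", "localhost"),
            "PORT": env.get("DB_PORT", "3306"),
        }
    }


# In settings.py this would be used as: DATABASES = database_settings()
```

Using `env[...]` for the required values means a missing credential fails loudly at startup instead of surfacing later as a confusing connection error.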

✅ Step 6: Apply Migrations and Collect Static Files

SSH into your cPanel account and run:

```bash
python manage.py migrate
python manage.py collectstatic
```

✅ Step 7: Set Environment Variables

Set Django environment variables like DJANGO_SETTINGS_MODULE and SECRET_KEY through the Python App setup panel or .env file.
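If you go the `.env` route, something has to read that file before Django starts. The loader below is a deliberately small sketch with a hypothetical `load_env_file` helper; packages such as `python-dotenv` handle quoting and edge cases more thoroughly, so treat this as an illustration of the idea rather than a replacement.

```python
import os


def load_env_file(path, environ=os.environ):
    """Copy KEY=VALUE pairs from a .env-style file into environ, keeping existing values."""
    with open(path, encoding="utf-8") as handle:
        for raw_line in handle:
            line = raw_line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue  # skip blanks, comments, and malformed lines
            key, _, value = line.partition("=")
            # setdefault means values already set in the panel take precedence.
            environ.setdefault(key.strip(), value.strip().strip("'\""))
    return environ
```

Calling this at the top of `passenger_wsgi.py` (before importing the Django application) would make `SECRET_KEY` and similar values available to `settings.py`. Keep the `.env` file out of version control and out of any publicly served directory.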

And voilà—your site is live!

Don’t Want to Start From Scratch? Learn From a Complete Guide

If you want to avoid the learning curve and skip directly to building and deploying, there’s a smarter way.

We highly recommend you check out this all-in-one course: 👉 Build Your Company Website in Django 5 | Deploy it on CPANEL

This course walks you through the entire process, from creating a professional-grade website using Django 5 to deploying it on cPanel like a pro. It’s designed for beginners and entrepreneurs who want real results without coding headaches.

What you’ll learn:

Django 5 fundamentals with real-world examples

How to design a complete business website

Step-by-step cPanel deployment process

Debugging and troubleshooting tips

Bonus: SEO and optimization tricks included!

Pro Tips for a Business-Boosting Website

Even with a great framework and deployment, there are still a few best practices that can elevate your online presence:

💡 Use High-Quality Visuals

Images can make or break your first impression. Use clean, brand-aligned visuals, and don’t forget to compress them for faster loading times.

💡 Write Copy That Converts

Your website copy should sound human, helpful, and persuasive. Think: how would you talk to a customer in person?

💡 Add a Blog Section

A blog helps with SEO and gives your brand a voice. Write about industry trends, how-tos, or product updates.

💡 Install an SSL Certificate

Most cPanel hosts provide free SSL via Let's Encrypt. Activate it to boost security and trust.

💡 Optimize for Mobile

Make sure your layout and fonts adapt smoothly to phones and tablets. Google prioritizes mobile-first indexing.

Conclusion: Your Online Journey Starts Here

Building a company website in Django 5 and deploying it on cPanel isn’t just a technical project—it’s a growth opportunity. You’re laying the foundation for your brand, your credibility, and your online income.

The beauty of Django 5 is that it balances power with simplicity. Combine that with cPanel’s user-friendly deployment process, and you have a complete toolkit to create something meaningful, fast.

And you don’t have to figure it all out alone.

Get guided support and professional walkthroughs in this step-by-step course 👉 Build Your Company Website in Django 5 | Deploy it on CPANEL

Whether you're a small business owner, freelancer, or aspiring developer—this is your blueprint to an impressive online presence. So go ahead, start building something awesome today.

Introduction to Microsoft Azure Basics: A Beginner's Guide

Cloud computing has changed the way businesses run, bringing flexibility, scalability, and room for innovation. Microsoft Azure, one of the leading cloud providers, is a broad platform whose services range from virtual machines and AI to database management and cybersecurity. Whether you are a developer, an IT professional, or simply curious about the cloud, learning the Azure fundamentals can be your gateway into a field with strong long-term prospects. In this beginner's guide, we'll cover what Azure is, its fundamental concepts, and the most important services to know as you begin your cloud journey.

What is Microsoft Azure?

Microsoft Azure is a cloud computing platform developed by Microsoft. It delivers cloud-based services for computing, analytics, storage, networking, and more. You pick the services you need to build new applications or to run existing ones. Launched in 2010, Azure has grown quickly and now supports hybrid cloud environments, artificial intelligence, and DevOps, making it a common choice for both enterprises and startups.

Why Learn Azure?

• Market Demand: Azure skills are in demand because enterprises use it heavily.
• Career Growth: Azure knowledge and certifications can be a stepping stone to becoming a cloud engineer, solutions architect, or DevOps engineer.
• Scalability & Flexibility: Azure serves businesses of all sizes, from startups to Fortune 500 companies.
• Integration with Microsoft Tools: Smooth integration with offerings such as Office 365, Active Directory, and Windows Server.

Fundamental Concepts of Microsoft Azure

Before looking at individual services, it helps to understand a few concepts that sit at the core of Azure.

1. Azure Regions and Availability Zones

Azure is available in geographies around the world, each divided into regions. Within a region, availability zones (physically separate locations, each with one or more data centers) provide redundancy and resiliency.

2. Resource Groups

A resource group is a container for Azure resources that belong together, such as virtual machines, databases, and storage accounts. It helps you organize and manage assets that share the same lifecycle.

3. Azure Resource Manager (ARM)

ARM is Azure's deployment and management service. It enables you to manage resources through templates, REST APIs, or command-line tools in a uniform way.

4. Pay-As-You-Go Model

Azure has a pay-as-you-go pricing model, meaning you pay only for what you use. It also has reserved instances and spot pricing to optimize costs.
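To make the pay-as-you-go idea concrete, here is a back-of-the-envelope sketch. The hourly rate is a made-up figure for illustration, not an actual Azure price; real rates vary by service, size, and region.

```python
def monthly_cost(hourly_rate, hours_per_day, days=30):
    """Estimate a month of pay-as-you-go cost for one resource billed by the hour."""
    return round(hourly_rate * hours_per_day * days, 2)


HYPOTHETICAL_RATE = 0.10  # USD per hour, invented for this example

always_on = monthly_cost(HYPOTHETICAL_RATE, hours_per_day=24)
office_hours = monthly_cost(HYPOTHETICAL_RATE, hours_per_day=8)

if __name__ == "__main__":
    print("Running 24 hours a day:", always_on)
    print("Running 8 hours a day:", office_hours)
```

The point of the comparison is that a resource you shut down outside working hours is billed for a third of the time, which is where much of the saving in a pay-as-you-go model comes from.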

Top Azure Services That Every Beginner Should Know

Azure has over 200 services. As a beginner, focus on the most significant ones in categories such as compute, storage, networking, and databases.

1. Azure Virtual Machines (VMs)

Azure VMs are flexible compute resources that let you run Windows- or Linux-based workloads. A VM is essentially a computer in the cloud.

Use Cases:
• Hosting applications
• Running development environments
• Running legacy applications

2. Azure App Service

A fully managed service for building, running, and scaling web applications and APIs.

Why Use It?
• Scales up or down automatically with demand while staying highly available
• Multi-language support (.NET, Java, Node.js, Python)
• Built-in DevOps and CI/CD integration

3. Azure Blob Storage

Blob (Binary Large Object) storage is suited to unstructured data such as images, videos, and backups.

Key Features:
• Highly scalable and secure
• Supports data lifecycle management
• Accessible through a REST API

4. Azure SQL Database

A managed relational database service built on the Microsoft SQL Server engine.

Benefits:
• Automatic updates and backups
• Built-in high availability
• Hyperscale and serverless tiers

5. Azure Functions

A serverless compute service that runs your code in response to events.

Example Use Cases:
• Workflow automation
• Processing file uploads
• Handling HTTP requests

6. Azure Virtual Network (VNet)

A VNet is like a traditional network in your on-premises environment, but it lives in Azure.

Applications:
• Secure communication among resources
• VPN and ExpressRoute connectivity
• Subnet segmentation for better control

Getting Started with Azure

1. Create an Azure Account

Start with a free Azure account, which includes a $200 credit for the first 30 days and 12 months of free-tier services.

2. Explore the Azure Portal

The Azure Portal is a web-based graphical interface where you can create, configure, and manage Azure resources.

3. Use Azure CLI or PowerShell

If you prefer the command line, Azure CLI and Azure PowerShell let you work with resources programmatically.

4. Learn with Microsoft Learn

Microsoft Learn offers interactive, role-based learning paths tailored to new users.

Major Azure Management Tools

Getting familiar with the following tools will improve your ability to manage resources:

Azure Monitor

Collects and analyzes telemetry from your Azure infrastructure and lets you act on it.

Azure Advisor

Offers personalized best-practice recommendations to improve performance, availability, and cost-effectiveness.

Azure Cost Management + Billing

Helps you track spending and forecast costs so you stay within budget.

Security and Identity in Azure

Azure places a strong focus on security and compliance.

1. Azure Active Directory (Azure AD)

A cloud identity and access management service, now called Microsoft Entra ID. You use it to manage user identities and their access to Azure services.

2. Role-Based Access Control (RBAC)

Lets you define which users, groups, and applications have which permissions on specific resources.

3. Azure Key Vault

Used to securely store keys, secrets, and certificates.

Best Practices for Azure Beginners

• Start Small: Start with straightforward services like Virtual Machines, Blob Storage, and Azure SQL Database.
• Tagging: Apply metadata tags to resources for better organization and cost monitoring.
• Monitor Early: Use Azure Monitor and Alerts to track performance and anomalies.
• Secure Early: Implement firewalls, RBAC, and encryption from the early stages.
• Automate: Explore automation via Azure Logic Apps, ARM templates, and Azure DevOps.

Common Errors to Prevent

• Neglecting cost management and overprovisioning resources.
• Not backing up important data.
• Not implementing adequate monitoring or alerting.
• Granting excessive permissions in Azure AD.
• Using default settings without considering the security implications.

Conclusion

Microsoft Azure is a powerful, general-purpose platform that supports a wide variety of scenarios, from a small web hosting setup to a sophisticated enterprise solution. Success in the cloud starts with understanding the basics: core concepts, foundational services, and management tools, all of which this guide has introduced. And that's just the start. As you go further you'll meet more complex tools and capabilities, but a strong foundation is what lets you work through Azure with confidence. Go ahead and claim your free account, start experimenting, and join the cloud revolution.

Website: https://www.icertglobal.com/course/developing-microsoft-azure-solutions-70-532-certification-training/Classroom/80/3395

Unlocking the Power of Microsoft 365 with Microsoft Graph API

In today’s cloud-driven world, businesses rely heavily on productivity tools like Microsoft 365. From Outlook and OneDrive to Teams and SharePoint, these services generate and manage a vast amount of data. But how do developers tap into this ecosystem to build intelligent, integrated solutions? Enter Microsoft Graph API — Microsoft’s unified API endpoint that enables you to access data across its suite of services.

What is Microsoft Graph API?

Microsoft Graph API is a RESTful web API that allows developers to interact with the data of millions of users in Microsoft 365. Whether it’s retrieving calendar events, accessing user profiles, sending emails, or managing documents in OneDrive, Graph API provides a single endpoint to connect it all. The services it covers include:

Azure Active Directory

Outlook (Mail, Calendar, Contacts)

Teams

SharePoint

OneDrive

Excel

Planner

To Do

This unified approach simplifies authentication, query syntax, and data access across services.

Key Features

Single Authentication Flow: Using Microsoft Identity Platform, you can authenticate once and gain access to all services under Microsoft Graph.

Deep Integration with Microsoft 365: You can build apps that deeply integrate with the Office ecosystem — for example, a chatbot that reads Teams messages or a dashboard displaying user analytics.

Webhooks & Real-Time Data: Graph API supports webhooks, enabling apps to subscribe to changes in real time (e.g., receive notifications when a new file is uploaded to OneDrive).

Rich Data Access: Gain insights with advanced queries using OData protocol, including filtering, searching, and ordering data.

Extensible Schema: Microsoft Graph lets you extend directory schema for custom applications.
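To illustrate the OData-style querying mentioned under Rich Data Access, the sketch below composes a Graph URL with `$select`, `$filter`, `$orderby`, and `$top` options using only the standard library. The helper name `build_query_url` is hypothetical, and which options a given resource actually supports depends on that resource, so check the reference for the endpoint you call.

```python
from urllib.parse import quote, urlencode

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_query_url(resource, *, select=None, filter_=None, orderby=None, top=None):
    """Compose a Microsoft Graph URL with OData query options (hypothetical helper)."""
    params = {}
    if select:
        params["$select"] = ",".join(select)
    if filter_:
        params["$filter"] = filter_
    if orderby:
        params["$orderby"] = orderby
    if top is not None:
        params["$top"] = str(top)
    # Keep "$" and "," readable; everything else is percent-encoded.
    query = urlencode(params, safe="$,", quote_via=quote)
    return f"{GRAPH_BASE}/{resource.strip('/')}" + (f"?{query}" if query else "")


if __name__ == "__main__":
    print(
        build_query_url(
            "users",
            select=["displayName", "mail"],
            filter_="startswith(displayName,'A')",
            top=5,
        )
    )
```

Asking only for the fields you need with `$select` keeps responses small, and filtering on the server avoids downloading records you would discard anyway.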

Common Use Cases

Custom Dashboards: Display user metrics like email traffic, document sharing activity, or meetings analytics.

Workplace Automation: Create workflows triggered by calendar events or file uploads.

Team Collaboration Apps: Enhance Microsoft Teams with bots or tabs that use Graph API to fetch user or channel data.

Security & Compliance: Monitor user sign-ins, audit logs, or suspicious activity.

Authentication & Permissions

To use Graph API, your application must be registered in Azure Active Directory. After registration, you can request scopes like User.Read, Mail.Read, or Files.ReadWrite. Microsoft enforces strict permission models to ensure data privacy and control.

Getting Started

Register your app in Azure Portal.

Choose appropriate Microsoft Graph permissions.

Obtain OAuth 2.0 access token.

Call Graph API endpoints using HTTP or SDKs (available for .NET, JavaScript, Python, and more).
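As a sketch of the last step, this is roughly what a raw HTTP call looks like once you hold an access token. It uses only the standard library; the function names are hypothetical, and obtaining the token itself is out of scope here. Note that the `/me` endpoint needs a delegated token issued for a signed-in user, not an app-only token.

```python
import json
import urllib.request

ME_ENDPOINT = "https://graph.microsoft.com/v1.0/me"


def build_me_request(access_token):
    """Build a GET request for the signed-in user's profile (not sent yet)."""
    return urllib.request.Request(
        ME_ENDPOINT,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Accept": "application/json",
        },
    )


def fetch_profile(access_token):
    """Send the request and return the decoded JSON body; raises on HTTP errors."""
    with urllib.request.urlopen(build_me_request(access_token)) as response:
        return json.load(response)
```

The official SDKs wrap this same pattern and add conveniences such as retries and paging, so the raw version is mainly useful for understanding what goes over the wire.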

Learn More About Our Microsoft Graph API

Microsoft Graph API is a powerful tool that connects you to the heart of Microsoft 365. Whether you’re building enterprise dashboards, automation scripts, or intelligent assistants, Graph API opens the door to endless possibilities. With just a few lines of code, you can tap into the workflows of millions and bring innovation right into the productivity stack.

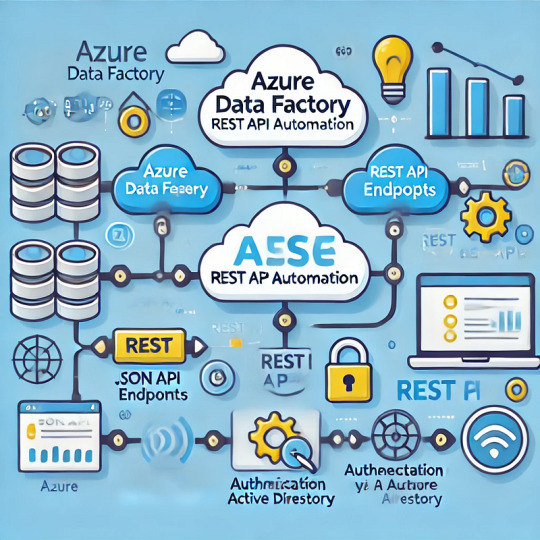

Introduction to Azure Data Factory's REST API: Automating Data Pipelines

1. Overview of Azure Data Factory REST API

Azure Data Factory (ADF) provides a RESTful API that allows users to automate and manage data pipelines programmatically. The API supports various operations such as:

Creating, updating, and deleting pipelines

Triggering pipeline runs

Monitoring pipeline execution

Managing linked services and datasets

By leveraging the REST API, organizations can integrate ADF with CI/CD pipelines, automate workflows, and enhance overall data operations.

2. Authenticating with Azure Data Factory REST API

Before making API calls, authentication is required using Azure Active Directory (Azure AD). The process involves obtaining an OAuth 2.0 token.

Steps to Get an Authentication Token

Register an Azure AD App in the Azure Portal.

Assign permissions to allow the app to interact with ADF.

Use a service principal to authenticate and generate an access token.

Here’s a Python script to obtain the OAuth 2.0 token:

```python
import requests

# Placeholders: replace with the values from your Azure AD app registration.
TENANT_ID = "your-tenant-id"
CLIENT_ID = "your-client-id"
CLIENT_SECRET = "your-client-secret"
RESOURCE = "https://management.azure.com/"

AUTH_URL = f"https://login.microsoftonline.com/{TENANT_ID}/oauth2/token"

data = {
    "grant_type": "client_credentials",
    "client_id": CLIENT_ID,
    "client_secret": CLIENT_SECRET,
    "resource": RESOURCE,
}

response = requests.post(AUTH_URL, data=data)
response.raise_for_status()  # fail early on a bad tenant, client ID, or secret
token = response.json().get("access_token")

print("Access Token:", token)
```
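Access tokens are short-lived, so a script that runs for hours cannot fetch one token at startup and reuse it forever. The sketch below shows one possible way to handle that: a small cache that asks for a new token shortly before the old one expires. `TokenCache` and its `fetch_token` callable are hypothetical names; `fetch_token` is assumed to wrap the token request above and return the token together with its expiry time as a Unix timestamp.

```python
import time


class TokenCache:
    """Cache an access token and refresh it shortly before it expires."""

    def __init__(self, fetch_token, margin_seconds=300, clock=time.time):
        self._fetch_token = fetch_token  # callable returning (token, expires_at_epoch)
        self._margin = margin_seconds    # refresh this many seconds before expiry
        self._clock = clock              # injectable for testing
        self._token = None
        self._expires_at = 0.0

    def get(self):
        """Return a valid token, fetching a new one if needed."""
        if self._token is None or self._clock() >= self._expires_at - self._margin:
            self._token, self._expires_at = self._fetch_token()
        return self._token
```

With this in place, code that builds request headers would call `cache.get()` each time instead of holding on to a single `token` variable.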

3. Triggering an Azure Data Factory Pipeline using REST API

Once authenticated, you can trigger a pipeline execution using the API.

API Endpoint

```
POST https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.DataFactory/factories/{factoryName}/pipelines/{pipelineName}/createRun?api-version=2018-06-01
```

Python Example: Triggering a Pipeline

```python
import requests

# Placeholders: replace with your own subscription, resource group, and factory.
SUBSCRIPTION_ID = "your-subscription-id"
RESOURCE_GROUP = "your-resource-group"
FACTORY_NAME = "your-adf-factory"
PIPELINE_NAME = "your-pipeline-name"
API_VERSION = "2018-06-01"

URL = (
    f"https://management.azure.com/subscriptions/{SUBSCRIPTION_ID}"
    f"/resourceGroups/{RESOURCE_GROUP}"
    f"/providers/Microsoft.DataFactory/factories/{FACTORY_NAME}"
    f"/pipelines/{PIPELINE_NAME}/createRun?api-version={API_VERSION}"
)

headers = {
    "Authorization": f"Bearer {token}",  # token obtained in the authentication step
    "Content-Type": "application/json",
}

response = requests.post(URL, headers=headers)
print("Pipeline Trigger Response:", response.json())
```
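If your pipeline defines parameters, the createRun call can, as far as the API reference describes it, carry them as a JSON object in the request body. The sketch below builds such a request with the standard library without sending it. The helper name and the `inputPath` parameter are hypothetical; use the parameter names your own pipeline declares.

```python
import json
import urllib.request


def build_create_run_request(url, token, parameters=None):
    """Build (but do not send) a createRun POST with optional pipeline parameters."""
    # An empty JSON object is sent when the pipeline takes no parameters.
    body = json.dumps(parameters or {}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    request = build_create_run_request(
        "https://management.azure.com/your-createRun-url",  # placeholder URL
        "your-access-token",
        {"inputPath": "raw/2024"},  # hypothetical pipeline parameter
    )
    print(request.get_method(), request.data)
```

With the `requests` library the equivalent is passing the same dictionary through the `json=` argument of `requests.post`.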

4. Monitoring Pipeline Runs using REST API

After triggering a pipeline, you might want to check its status. The following API call retrieves the status of a pipeline run:

API Endpoint

```
GET https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.DataFactory/factories/{factoryName}/pipelineruns/{runId}?api-version=2018-06-01
```

Python Example: Checking Pipeline Run Status

```python
RUN_ID = "your-pipeline-run-id"  # the run ID returned when the pipeline was triggered

# A separate name keeps the createRun URL from the previous example intact.
RUN_URL = (
    f"https://management.azure.com/subscriptions/{SUBSCRIPTION_ID}"
    f"/resourceGroups/{RESOURCE_GROUP}"
    f"/providers/Microsoft.DataFactory/factories/{FACTORY_NAME}"
    f"/pipelineruns/{RUN_ID}?api-version={API_VERSION}"
)

response = requests.get(RUN_URL, headers=headers)
print("Pipeline Run Status:", response.json())
```
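A single status check is rarely enough, because a run usually takes a while to finish. One possible approach is to poll until the run reaches a final state, as sketched below. The helper `wait_for_run` is hypothetical, and the set of terminal status names is an assumption based on the values ADF commonly reports; adjust it if your runs show others.

```python
import time

# Assumed final states; a run in any other state is treated as still in progress.
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(fetch_status, interval_seconds=30, max_checks=120, sleep=time.sleep):
    """Call fetch_status() until it returns a terminal status or the checks run out."""
    status = None
    for _ in range(max_checks):
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        sleep(interval_seconds)  # wait before asking again
    raise TimeoutError(f"run still {status!r} after {max_checks} checks")
```

Here `fetch_status` is any callable that returns the run's status string, for example a small function that performs the GET request above and reads the `status` field from the JSON response. Passing it in as an argument keeps the polling logic easy to test without touching the network.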

5. Automating Pipeline Execution with a Scheduler

To automate pipeline execution at regular intervals, you can use:

Azure Logic Apps

Azure Functions

A simple Python script with a scheduler (e.g., cron jobs or Windows Task Scheduler)

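Here’s an example using Python’s `schedule` module:

```python
import time

import requests
import schedule  # third-party package: pip install schedule


def run_pipeline():
    # URL and headers are the createRun URL and auth headers from the trigger example.
    # Access tokens expire, so a long-running scheduler should fetch a fresh one per run.
    response = requests.post(URL, headers=headers)
    print("Pipeline Triggered:", response.json())


# Run the pipeline every day at 08:00 local time.
schedule.every().day.at("08:00").do(run_pipeline)

while True:
    schedule.run_pending()
    time.sleep(60)
```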

6. Conclusion

The Azure Data Factory REST API provides a powerful way to automate data workflows. By leveraging the API, you can programmatically trigger pipelines, monitor executions, and integrate ADF with other cloud services. Whether you’re managing data ingestion, transformation, or orchestration, using the REST API ensures efficient and scalable automation.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

Which cloud service is the best, AWS or Microsoft Azure?

The choice between AWS (Amazon Web Services) and Microsoft Azure depends on various factors, including your specific needs, budget, and technical expertise. Here’s a comparison based on key aspects:

1. Market Share & Popularity

AWS: The largest cloud service provider, holding the biggest market share.

Azure: Second-largest, but growing quickly, especially with enterprise customers using Microsoft products.

2. Services & Features

AWS: Offers a vast range of services (compute, storage, AI, analytics, security, etc.). It’s known for its maturity, reliability, and extensive documentation.

Azure: Strong in enterprise solutions, especially for companies already using Windows Server, Active Directory, and Microsoft 365.

3. Pricing & Cost

AWS: Flexible pricing models with a pay-as-you-go approach. It can be cost-effective but might require optimization.

Azure: Competitive pricing, especially for Microsoft-based businesses (discounts on Windows-based services).

4. Performance & Reliability

AWS: Offers broad global coverage and a strong reliability track record.

Azure: Also reliable, but some users report occasional downtimes and service inconsistencies.

5. Ease of Use

AWS: Steeper learning curve but excellent documentation and community support.

Azure: More user-friendly for businesses already familiar with Microsoft products.

6. Security & Compliance

Both AWS and Azure offer high security and compliance standards (HIPAA, GDPR, ISO, etc.), but Azure is often preferred by enterprises needing deep Microsoft integration.

Which One Should You Choose?

Go for AWS if you need the best cloud performance, flexibility, and a wide range of services.

Choose Azure if your company already relies on Microsoft products and wants seamless integration.

If you’re learning cloud computing, AWS is a great starting point because of its dominance in the market. However, knowing both AWS and Azure can be beneficial for career growth! 🚀

Scope @ NareshIT:

NareshIT’s Python Application Development program offers extensive hands-on training in front-end, middleware, and back-end technology.

It builds your skills through phase-end and capstone projects based on real business scenarios.

You learn the concepts from leading industry experts, with content structured to ensure industry relevance.

You build an end-to-end application with exciting features.

Azure Databricks: Unleashing the Power of Big Data and AI

Introduction to Azure Databricks

In a world where data is considered the new oil, managing and analyzing vast amounts of information is critical. Enter Azure Databricks, a unified analytics platform designed to simplify big data and artificial intelligence (AI) workflows. Developed in partnership between Microsoft and Databricks, this tool is transforming how businesses leverage data to make smarter decisions.

Azure Databricks combines the power of Apache Spark with Azure’s robust ecosystem, making it an essential resource for businesses aiming to harness the potential of data and AI.

Core Features of Azure Databricks

Unified Analytics Platform

Azure Databricks brings together data engineering, data science, and business analytics in one environment. It supports end-to-end workflows, from data ingestion to model deployment.

Support for Multiple Languages

Whether you’re proficient in Python, SQL, Scala, R, or Java, Azure Databricks has you covered. Its flexibility makes it a preferred choice for diverse teams.

Seamless Integration with Azure Services

Azure Databricks integrates effortlessly with Azure’s suite of services, including Azure Data Lake, Azure Synapse Analytics, and Power BI, streamlining data pipelines and analysis.

How Azure Databricks Works

Architecture Overview

At its core, Azure Databricks leverages Apache Spark’s distributed computing capabilities. This ensures high-speed data processing and scalability.

Collaboration in a Shared Workspace

Teams can collaborate in real-time using shared notebooks, fostering a culture of innovation and efficiency.

Automated Cluster Management

Azure Databricks simplifies cluster creation and management, allowing users to focus on analytics rather than infrastructure.

Advantages of Using Azure Databricks

Scalability and Flexibility

Azure Databricks automatically scales resources based on workload requirements, ensuring optimal performance.

Cost Efficiency

Pay-as-you-go pricing and resource optimization help businesses save on operational costs.

Enterprise-Grade Security

With features like role-based access control (RBAC) and integration with Azure Active Directory, Azure Databricks ensures data security and compliance.

Comparing Azure Databricks with Other Platforms

Azure Databricks vs. Apache Spark

While Apache Spark is the foundation, Azure Databricks enhances it with a user-friendly interface, better integration, and managed services.

Azure Databricks vs. AWS Glue

Azure Databricks offers superior performance and scalability for machine learning workloads compared to AWS Glue, which is primarily an ETL service.

Key Use Cases for Azure Databricks

Data Engineering and ETL Processes

Azure Databricks simplifies Extract, Transform, Load (ETL) processes, enabling businesses to cleanse and prepare data efficiently.

Machine Learning Model Development

Data scientists can use Azure Databricks to train, test, and deploy machine learning models with ease.

Real-Time Analytics

From monitoring social media trends to analyzing IoT data, Azure Databricks supports real-time analytics for actionable insights.

Industries Benefiting from Azure Databricks

Healthcare

By enabling predictive analytics, Azure Databricks helps healthcare providers improve patient outcomes and optimize operations.

Retail and E-Commerce

Retailers leverage Azure Databricks for demand forecasting, customer segmentation, and personalized marketing.

Financial Services

Banks and financial institutions use Azure Databricks for fraud detection, risk assessment, and portfolio optimization.

Getting Started with Azure Databricks

Setting Up an Azure Databricks Workspace

Begin by creating an Azure Databricks workspace through the Azure portal. This serves as the foundation for your analytics projects.

Creating Clusters

Clusters are the computational backbone. Azure Databricks makes it easy to create and configure clusters tailored to your workload.

Writing and Executing Notebooks

Use notebooks to write, debug, and execute your code. Azure Databricks’ notebook interface is intuitive and collaborative.

Best Practices for Using Azure Databricks

Optimizing Cluster Performance

Select the appropriate cluster size and configurations to balance cost and performance.

Managing Data Storage Effectively

Integrate with Azure Data Lake for efficient and scalable data storage solutions.

Ensuring Data Security and Compliance

Implement RBAC, encrypt data at rest, and adhere to industry-specific compliance standards.

Challenges and Solutions in Using Azure Databricks

Managing Costs

Monitor resource usage and terminate idle clusters to avoid unnecessary expenses.
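Much of this can be set when the cluster is defined, rather than relying on someone remembering to shut it down. The sketch below builds a cluster specification with autoscaling bounds and an auto-termination timeout, using field names from the Databricks Clusters API. The helper `cluster_spec` is hypothetical, and the runtime version and node type are left as placeholders because valid values depend on your workspace.

```python
def cluster_spec(name, min_workers=1, max_workers=4, idle_minutes=30):
    """Return a cluster definition that scales with load and stops when idle."""
    if min_workers > max_workers:
        raise ValueError("min_workers cannot exceed max_workers")
    return {
        "cluster_name": name,
        "spark_version": "<runtime-version>",  # placeholder: pick one your workspace offers
        "node_type_id": "<azure-vm-size>",     # placeholder: pick an available VM size
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "autotermination_minutes": idle_minutes,  # shut down after this much idle time
    }


if __name__ == "__main__":
    print(cluster_spec("nightly-etl", min_workers=2, max_workers=8, idle_minutes=20))
```

A short idle timeout suits interactive clusters that people forget about, while a tight `max_workers` bound caps how far a runaway job can scale.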

Handling Large Datasets Efficiently

Leverage partitioning and caching to process large datasets effectively.

Debugging and Error Resolution

Azure Databricks provides detailed logs and error reports, simplifying the debugging process.

Future Trends in Azure Databricks

Enhanced AI Capabilities

Expect more advanced AI tools and features to be integrated, empowering businesses to solve complex problems.

Increased Automation

Automation will play a bigger role in streamlining workflows, from data ingestion to model deployment.

Real-Life Success Stories

Case Study: How a Retail Giant Scaled with Azure Databricks

A leading retailer improved inventory management and personalized customer experiences by utilizing Azure Databricks for real-time analytics.

Case Study: Healthcare Advancements with Predictive Analytics

A healthcare provider reduced readmission rates and enhanced patient care through predictive modeling in Azure Databricks.

Learning Resources and Support

Official Microsoft Documentation

Access in-depth guides and tutorials on the Microsoft Azure Databricks documentation.

Online Courses and Certifications

Platforms like Coursera, Udemy, and LinkedIn Learning offer courses to enhance your skills.

Community Forums and Events

Join the Databricks and Azure communities to share knowledge and learn from experts.

Conclusion

Azure Databricks is revolutionizing the way organizations handle big data and AI. Its robust features, seamless integrations, and cost efficiency make it a top choice for businesses of all sizes. Whether you’re looking to improve decision-making, streamline processes, or innovate with AI, Azure Databricks has the tools to help you succeed.

FAQs

1. What is the difference between Azure Databricks and Azure Synapse Analytics?

Azure Databricks focuses on big data analytics and AI, while Azure Synapse Analytics is geared toward data warehousing and business intelligence.

2. Can Azure Databricks handle real-time data processing?

Yes, Azure Databricks supports real-time data processing through its integration with streaming tools like Azure Event Hubs.

3. What skills are needed to work with Azure Databricks?

Knowledge of data engineering, programming languages like Python or Scala, and familiarity with Azure services is beneficial.

4. How secure is Azure Databricks for sensitive data?

Azure Databricks offers enterprise-grade security, including encryption, RBAC, and compliance with standards like GDPR and HIPAA.

5. What is the pricing model for Azure Databricks?

Azure Databricks uses a pay-as-you-go model, with costs based on the compute and storage resources used.
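
A back-of-the-envelope estimate can be sketched as follows, assuming compute is metered in Databricks Units (DBUs) on top of the underlying VM charge. Every rate below is a hypothetical placeholder, not a published price, and storage is left out.

```python
def estimate_cost(nodes, hours, dbu_per_node_hour, dbu_price, vm_price_per_hour):
    """Estimate compute cost as DBU charges plus VM charges (storage excluded)."""
    node_hours = nodes * hours
    dbu_cost = node_hours * dbu_per_node_hour * dbu_price
    vm_cost = node_hours * vm_price_per_hour
    return round(dbu_cost + vm_cost, 2)


# Hypothetical rates for illustration only.
estimate = estimate_cost(
    nodes=4,
    hours=10,
    dbu_per_node_hour=0.75,
    dbu_price=0.30,
    vm_price_per_hour=0.20,
)
print(estimate)
```

Plugging in the actual rates for your region and workload type gives a quick sanity check before a job is scheduled.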

0 notes

Text

How Azure Databricks & Data Factory Aid Modern Data Strategy

For all analytics and AI use cases, maximize data value with Azure Databricks.

What is Azure Databricks?

Azure Databricks is a fully managed first-party service that enables an open data lakehouse in Azure. Build a lakehouse on top of an open data lake to quickly light up analytical workloads and enable governance across your data estate. It supports data science, data engineering, machine learning, AI, and SQL-based analytics.

First-party Azure service coupled with additional Azure services and support.

Analytics for your latest, comprehensive data for actionable insights.

A data lakehouse foundation on an open data lake unifies and governs data.

Trustworthy data engineering and large-scale batch and streaming processing.

Get one seamless experience

Microsoft sells and supports Azure Databricks as a fully managed first-party service. Azure Databricks is natively connected with Azure services and starts with a single click in the Azure portal. With no integration work required, a full range of analytics and AI use cases can be enabled quickly.

Eliminate data silos and responsibly democratise data to enable scientists, data engineers, and data analysts to collaborate on well-governed datasets.

Use an open and flexible framework

Use an optimised lakehouse architecture on an open data lake to process all data types and quickly light up Azure analytics and AI workloads.

Use Apache Spark on Azure Databricks, Azure Synapse Analytics, Azure Machine Learning, and Power BI depending on the workload.

Choose from Python, Scala, R, Java, and SQL, and from data science frameworks and libraries such as TensorFlow, PyTorch, and scikit-learn.

Build effective Azure analytics

From the Azure portal, create Apache Spark clusters in minutes.

Photon provides rapid query speed, serverless compute simplifies maintenance, and Delta Live Tables delivers high-quality data with reliable pipelines.

Azure Databricks Architecture

Companies have long collected data from multiple sources, creating data lakes for scale. However, data lakes lacked data quality. The Lakehouse design arose to overcome the restrictions of both data warehouses and data lakes. Lakehouse, a comprehensive enterprise data infrastructure platform, uses Delta Lake, a popular storage layer. Databricks, a pioneer of the Data Lakehouse, offers Azure Databricks, a fully managed first-party Data and AI solution on Microsoft Azure, making Azure the best cloud for Databricks workloads. This blog article details its benefits:

Seamless Azure integration.

Regional performance and availability.

Compliance, security.

Unique Microsoft-Databricks relationship.

1.Seamless Azure integration

Azure Databricks, a first-party service on Microsoft Azure, integrates natively with valuable Azure Services and workloads, enabling speedy onboarding with a few clicks.

Native integration-first-party service

Microsoft Entra ID (previously Azure Active Directory): Azure Databricks connects seamlessly with Microsoft Entra ID for access control and authentication. Customers do not have to build this integration themselves, because the Microsoft and Databricks engineering teams have built it natively into Azure Databricks.

Azure Data Lake Storage (ADLS Gen2): Databricks can natively read and write data from ADLS Gen2, which has been collaboratively optimised for quick data access, enabling efficient data processing and analytics. Data tasks are simplified by integrating Azure Databricks with Data Lake and Blob Storage.

Azure Monitor and Log Analytics: Azure Monitor and Log Analytics provide insights into Azure Databricks clusters and jobs.

The Databricks extension for Visual Studio Code connects the local development environment directly to an Azure Databricks workspace.
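
For the ADLS Gen2 integration mentioned above, data is usually addressed with an `abfss://` URI. The small helper below shows how such a URI is put together; the storage account name `examplelake`, the container `raw` and the path are hypothetical.

```python
def adls_uri(account, container, path=""):
    """Build an abfss:// URI for a path in an ADLS Gen2 container."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


# Hypothetical storage account, container and folder.
uri = adls_uri("examplelake", "raw", "/sales/2024/")
print(uri)
```

A URI of this shape is what a notebook would typically pass to a reader or writer when working against the lake.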

Integrated, valuable services

Power BI: Power BI offers interactive visualizations and self-service business intelligence. All business users can benefit from the performance and technology of Azure Databricks when it is used with Power BI. Power BI Desktop connects to Azure Databricks clusters and SQL warehouses. Power BI’s enterprise semantic modelling and calculation features enable customer-relevant computations, hierarchies, and business logic, while the Azure Databricks Lakehouse orchestrates data flows into the model.

Publishers can publish Power BI reports to the Power BI service and let users access Azure Databricks data through SSO with the same Microsoft Entra ID credentials. With a Premium Power BI licence, you can Direct Publish from Azure Databricks to create Power BI datasets from Unity Catalog tables and schemas. Direct Lake mode is a feature unique to Power BI Premium and Microsoft Fabric F SKU (Fabric capacity) capacities that works with Azure Databricks. It loads Parquet-formatted files directly from a data lake, which lets Power BI analyse enormous data sets. This capability is beneficial for analysing large models quickly and for models with frequent data source updates.

Azure Data Factory (ADF): ADF natively ingests data from over 100 sources into Azure. It is easy to build, configure, deploy, and monitor in production, and it offers graphical data orchestration and monitoring. ADF can run notebook, Java archive (JAR), and Python code activities, and it integrates with Azure Databricks via a linked service to enable scalable data orchestration pipelines that ingest data from various sources and curate it in the Lakehouse.

Azure OpenAI: Azure Databricks features AI Functions, a built-in Databricks SQL function, to access Large Language Models (LLMs) straight from SQL. With this rollout, users can immediately test LLMs on their company data via a familiar SQL interface. Once the right LLM prompt has been developed, a production pipeline can be created rapidly using Databricks capabilities like Delta Live Tables or scheduled Jobs.

Microsoft Purview: Microsoft Azure’s data governance solution interfaces with the catalogue, lineage, and policy APIs of Azure Databricks Unity Catalog. This lets Microsoft Purview handle discovery and access requests while Unity Catalog remains Azure Databricks’ operational catalogue. Microsoft Purview syncs metadata with Unity Catalog, including metastore catalogues, schemas, tables, and views. This connection also discovers Lakehouse data and brings its metadata into Data Map, allowing scanning of the whole Unity Catalog metastore or of selected catalogues. The combination of Microsoft Purview data governance policies with Databricks Unity Catalog creates a single pane of glass for data and analytics governance.
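
To give a feel for the Azure Data Factory integration described in this list, the sketch below assembles the JSON for a pipeline activity that runs a Databricks notebook through a linked service. The overall shape follows ADF's Databricks Notebook activity as we understand it; the helper `notebook_activity`, the activity name, the linked service name, the notebook path and the parameter are all hypothetical.

```python
import json


def notebook_activity(name, linked_service, notebook_path, parameters=None):
    """Return an ADF activity definition that runs a Databricks notebook."""
    return {
        "name": name,
        "type": "DatabricksNotebook",
        # Points at the linked service holding the workspace connection.
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # Values passed to the notebook as parameters.
            "baseParameters": parameters or {},
        },
    }


# Hypothetical names for illustration.
activity = notebook_activity(
    "CurateSales",
    "AzureDatabricksLinkedService",
    "/Shared/curate_sales",
    {"run_date": "2024-01-01"},
)
print(json.dumps(activity, indent=2))
```

Keeping the connection details in the linked service means the same activity definition can be reused across environments by swapping only the reference.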

The best of Azure Databricks and Microsoft Fabric

Microsoft Fabric is a complete data and analytics platform for organizations. It integrates Data Engineering, Data Factory, Data Science, Data Warehouse, Real-Time Intelligence, and Power BI on a SaaS foundation. Microsoft Fabric includes OneLake, an open, governed, unified SaaS data lake for organizational data. Microsoft Fabric creates shortcuts to Delta-Parquet files, folders, and tables in OneLake to simplify data access. These shortcuts allow all Microsoft Fabric engines to act on data without moving or copying it and without disrupting host engine utilization.

Creating a shortcut to Azure Databricks Delta Lake tables lets clients easily serve Lakehouse data to Power BI using Direct Lake mode. Power BI Premium, a core component of Microsoft Fabric, offers Direct Lake mode to serve data directly from OneLake without querying an Azure Databricks Lakehouse or warehouse endpoint. This eliminates the need to duplicate data or import it into a Power BI model, and it enables very fast performance directly over OneLake data instead of ADLS Gen2. Unlike on other public clouds, Microsoft Azure clients can use Azure Databricks or Microsoft Fabric, both built on the Lakehouse architecture, to get the most from their data. With better development pipeline connectivity, Azure Databricks and Microsoft Fabric may simplify organisations’ data journeys.

2.Regional performance and availability

Azure Databricks offers strong scalability and performance:

Azure Databricks compute optimisation: GPU-enabled instances, jointly optimised by Databricks engineering, speed up machine learning and deep learning workloads. Azure Databricks creates about 10 million VMs daily.

Azure Databricks is available in 43 regions worldwide, and that number is expanding.

3.Secure and compliant

Prioritising customer needs, Azure Databricks uses Azure’s enterprise-grade security and compliance:

Azure Security Centre monitors and protects Azure Databricks. It automatically collects, analyses, and integrates log data from several resources, and it displays prioritised security alerts together with the information needed to quickly investigate an attack and options for remediating it. Data can be encrypted with Azure Databricks.

Azure Databricks workloads fulfil regulatory standards thanks to Azure’s industry-leading compliance certifications, including PCI-DSS (Classic) and HIPAA (Azure Databricks SQL Serverless, Model Serving).

Only Azure offers Azure Confidential Computing (ACC). End-to-end data encryption is possible with Azure Databricks confidential computing. AMD-based Azure Confidential Virtual Machines (VMs) provide comprehensive VM encryption with no performance impact, while hardware-based Trusted Execution Environments (TEEs) encrypt data in use.

Encryption: Azure Databricks natively supports customer-managed keys from Azure Key Vault and Managed HSM. This feature enhances encryption security and control.

4.Unique partnership: Databricks and Microsoft

Its unique relationship with Microsoft is a highlight of Azure Databricks. Why is it special?

Joint engineering: Databricks and Microsoft create products together for optimal integration and performance. This includes increased Azure Databricks engineering investments and dedicated Microsoft technical resources for resource providers, workspace, and Azure Infra integrations, as well as customer support escalation management.

Operations and support: Azure Databricks, a first-party solution, is only available in the Azure portal, simplifying deployment and management. Microsoft supports this under the same SLAs, security rules, and support contracts as other Azure services, ensuring speedy ticket resolution in coordination with Databricks support teams.

Azure Databricks costs can be managed transparently alongside other Azure services with unified billing.

Go-to-market and marketing: Joint events, funding programmes, marketing campaigns, customer testimonials, account planning, and co-sell activities between the two organisations improve customer care and support throughout the data journey.

Commercial: Large strategic organizations select Microsoft for Azure Databricks sales, technical support, and partner enablement. Microsoft offers specialized sales, business development, and planning teams for Azure Databricks to suit all clients’ needs globally.

Use Azure Databricks to enhance productivity

Selecting the correct data analytics platform is critical. Data professionals can boost productivity, cost savings, and ROI with Azure Databricks, a sophisticated data analytics and AI platform that is well integrated, managed, and secure. It is an attractive option for organisations seeking efficiency, creativity, and intelligence from their data estate, owing to Azure’s global presence, workload integration, security, compliance, and its unique relationship with Microsoft.

Read more on Govindhtech.com

#microsoft#azure#azuredatabricks#MicrosoftAzure#MicrosoftFabric#OneLake#DataFactory#lakehouse#ai#technology#technews#news

0 notes

Text

PYTHON DEVELOPMENT EXPERTS

We at Next Big Technology are Python Development Experts; we develop Python websites for your enterprise. We also integrate Python with other technologies.

We are Python Development Experts and provide our clients with state-of-the-art programming solutions in the Python language. We, at Nextbigtechnology, design all our solutions based on the specific needs of our clients. All our solutions exhibit Python’s prime feature of readability. Our team of experts also makes sure that clients can easily implement the solutions provided in their own environments.

#pythondevelopement#pythondevelopers#Python Dynamic Website Development Python Development#-Python Web Application Development using Frameworks Django#Zope#CherryPy#-Python UI Design and Development using Frameworks PyGTK#PyQt#wxPython#-Python Web Crawler Development#-Python Flask Web Development#-Python Desktop Application Development#-Turnkey Windows Services Development#-Python Custom Content Management System Development#-Python and Active Directory Integration Services#-Java and Python/Django Integration Services#-Responsive Web Development with Python#HTML5#and JavaScript#-Python and PHP Integration Services#-Python and ASP .NET Integration Services#-Python and Perl Integration Services#-Python Web Services Development#-Python Migration Services#-Existing Web Application performance monitoring#tuning#and scalability

0 notes

Text

In this guide, we will cover the installation and configuration of LibreNMS on a CentOS 7 server with Nginx and an optional Let’s Encrypt SSL certificate for security.

What is LibreNMS?

LibreNMS is a community-based, GPL-licensed, auto-discovering network monitoring tool based on PHP, MySQL, and SNMP. LibreNMS supports a wide range of network hardware and operating systems, including Juniper, Cisco, Linux, Foundry, FreeBSD, Brocade, HP, Windows and many more. It is a fork of the “Observium” monitoring tool.

Features of LibreNMS

Below are the top features of the LibreNMS network monitoring tool:

Automatic discovery – It will automatically discover your entire network using CDP, FDP, LLDP, OSPF, BGP, SNMP, and ARP.

API access – LibreNMS provides a full API to manage, graph and retrieve data from your install.

Automatic updates – With LibreNMS you stay up to date automatically with new features and bug fixes.

Customisable alerting – A highly flexible alerting system that can notify via email, IRC, Slack and more.

Distributed polling – Support for distributed polling through horizontal scaling, which grows with your network.

Billing system – Easily generate bandwidth bills for ports on your network based on usage or transfer.

Android and iOS application – A native iPhone/Android app is available which provides core functionality.

Multiple authentication methods – MySQL, HTTP, LDAP, Radius, Active Directory.

Integration support for NfSen, collectd, SmokePing, RANCID, Oxidized.

Install LibreNMS on CentOS 7 with Let’s Encrypt and Nginx

Follow the steps provided here to have a running, operational LibreNMS monitoring tool on your CentOS 7 server.

Ensure your system is updated and rebooted:

sudo yum -y update
sudo reboot

After the reboot, set the timezone and chronyd:

sudo yum -y install chrony
sudo timedatectl set-timezone Africa/Nairobi
sudo timedatectl set-ntp yes
sudo chronyc sources

Put SELinux into permissive mode

Run the command below to put SELinux in permissive mode:

sudo setenforce 0

To persist the change, edit the SELinux configuration file:

sudo sed -i 's/^SELINUX=.*/SELINUX=permissive/g' /etc/selinux/config
cat /etc/selinux/config | grep SELINUX=

Add EPEL repository to the system

Enable the EPEL repository on your system:

sudo yum -y install vim epel-release yum-utils

Install required dependencies

Install all dependencies required to install and run LibreNMS on CentOS 7:

sudo yum -y install zip unzip git cronie wget fping net-snmp net-snmp-utils ImageMagick jwhois mtr rrdtool MySQL-python nmap python-memcached python3 python3-pip python3-devel

Install PHP and Nginx

PHP will be installed from the REMI repository. Add it to the system as below:

sudo yum -y install http://rpms.remirepo.net/enterprise/remi-release-7.rpm

Disable the remi-php54 repo, which is enabled by default, and enable the repository for PHP 7.4:

sudo yum-config-manager --disable remi-php54
sudo yum-config-manager --enable remi-php74

Then finally install the required PHP modules:

sudo yum -y install php php-{cli,mbstring,process,fpm,mysqlnd,zip,snmp,devel,gd,mcrypt,curl,xml,pear,bcmath}

Configure PHP

Edit the PHP-FPM configuration file:

sudo vim /etc/php-fpm.d/www.conf

Set the variables below:

user = nginx
group = nginx
listen = /var/run/php-fpm/php-fpm.sock
listen.owner = nginx
listen.group = nginx
listen.mode = 0660

Set the PHP timezone:

sudo vim /etc/php.ini

date.timezone = Africa/Nairobi

Install nginx web server

Install the Nginx web server on CentOS 7:

sudo yum -y install nginx

Start the nginx and php-fpm services:

for i in nginx php-fpm; do
  sudo systemctl enable $i
  sudo systemctl restart $i
done

Install and Configure Database Server

Install the MariaDB database on your CentOS 7 server:

curl -LsS -O https://downloads.mariadb.com/MariaDB/mariadb_repo_setup
sudo bash mariadb_repo_setup
sudo yum install MariaDB-server MariaDB-client MariaDB-backup
sudo systemctl enable --now mariadb
sudo mariadb-secure-installation

Edit the my.cnf file and add the lines below within the [mysqld] section:

sudo vim /etc/my.cnf.d/server.cnf

[mysqld]
innodb_file_per_table=1
lower_case_table_names=0

Restart the MariaDB server after making the changes:

sudo systemctl restart mariadb

Once the database server is installed and running, log in as the root user:

sudo mysql -u root -p

Create a database and user:

CREATE DATABASE librenms CHARACTER SET utf8 COLLATE utf8_general_ci;
GRANT ALL PRIVILEGES ON librenms.* TO 'librenms_user'@'localhost' IDENTIFIED BY "Password1234!";
FLUSH PRIVILEGES;
EXIT;

Install and Configure LibreNMS on CentOS 7

If you want to use a Let’s Encrypt SSL certificate, you need to request it first. Run the command below:

sudo yum -y install certbot python2-certbot-nginx

Enable the HTTP and HTTPS ports on the firewall if you have the firewalld service running:

sudo firewall-cmd --add-service={http,https} --permanent
sudo firewall-cmd --reload

Now obtain the certificate to use:

export DOMAIN='librenms.example.com'
export EMAIL="[email protected]"
sudo certbot certonly --standalone -d $DOMAIN --preferred-challenges http --agree-tos -n -m $EMAIL --keep-until-expiring

The certificate will be placed under the /etc/letsencrypt/live/librenms.example.com/ directory.

Clone LibreNMS project from Github

Add the librenms user:

sudo useradd librenms -d /opt/librenms -M -r
sudo usermod -aG librenms nginx

Clone the LibreNMS project from Github:

cd /opt
sudo git clone https://github.com/librenms/librenms.git
sudo chown librenms:librenms -R /opt/librenms

Install PHP dependencies:

cd /opt/librenms
sudo ./scripts/composer_wrapper.php install --no-dev

A successful install should have output similar to below:

....
Requirement already satisfied: typing-extensions>=3.6.4; python_version < "3.8" in /root/.local/lib/python3.6/site-packages (from importlib-metadata>=1.0; python_version < "3.8"->redis>=3.0->-r requirements.txt (line 3))
Installing collected packages: psutil, command-runner
Running setup.py install for psutil: started
Running setup.py install for psutil: finished with status 'done'
Successfully installed command-runner-1.3.0 psutil-5.9.0

Copy and configure SNMP configuration template

Run the commands below in the terminal:

sudo cp /opt/librenms/snmpd.conf.example /etc/snmp/snmpd.conf
sudo vim /etc/snmp/snmpd.conf

Set your community string by replacing RANDOMSTRINGGOESHERE:

com2sec readonly default MyInternalNetwork

Download the distribution version identifier script:

sudo curl -o /usr/bin/distro https://raw.githubusercontent.com/librenms/librenms-agent/master/snmp/distro
sudo chmod +x /usr/bin/distro

Then start and enable the snmpd service:

sudo systemctl enable snmpd
sudo systemctl restart snmpd

When all is done, create the nginx configuration file for LibreNMS.

Nginx configuration without SSL

This is placed under /etc/nginx/conf.d/librenms.conf:

server {
    listen 80;
    server_name librenms.example.com;
    root /opt/librenms/html;
    index index.php;
    charset utf-8;
    gzip on;
    gzip_types text/css application/javascript text/javascript application/x-javascript image/svg+xml text/plain text/xsd text/xsl text/xml image/x-icon;
    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }
    location /api/v0 {
        try_files $uri $uri/ /api_v0.php?$query_string;
    }
    location ~ \.php {
        include fastcgi.conf;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        fastcgi_pass unix:/var/run/php-fpm/php-fpm.sock;
    }
    location ~ /\.ht {
        deny all;
    }
}

Nginx Configuration with SSL

server {
    listen 80;
    server_name librenms.example.com;
    root /opt/librenms/html;
    return 301 https://$server_name$request_uri;
}
server {
    listen 443 ssl http2;
    server_name librenms.example.com;
    root /opt/librenms/html;
    index index.php;
    # Set Logs path
    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;
    # Configure SSL
    ssl_certificate /etc/letsencrypt/live/librenms.example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/librenms.example.com/privkey.pem;
    # Enabling Gzip compression on Nginx
    charset utf-8;
    gzip on;
    gzip_types text/css application/javascript text/javascript application/x-javascript image/svg+xml text/plain text/xsd text/xsl text/xml image/x-icon;
    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }
    location /api/v0 {
        try_files $uri $uri/ /api_v0.php?$query_string;
    }
    # PHP-FPM handle all .php files requests
    location ~ \.php {
        include fastcgi.conf;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        fastcgi_pass unix:/var/run/php-fpm/php-fpm.sock;
    }
    location ~ /\.ht {
        deny all;
    }
}

Confirm the nginx syntax:

sudo nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful

If all looks good, restart the service:

sudo systemctl restart nginx

Configure cron jobs

sudo cp /opt/librenms/librenms.nonroot.cron /etc/cron.d/librenms

Copy logrotate config

LibreNMS keeps logs in /opt/librenms/logs. Over time these can become large and need to be rotated out. To rotate out the old logs you can use the provided logrotate config file:

sudo cp /opt/librenms/misc/librenms.logrotate /etc/logrotate.d/librenms

Set proper permissions

sudo chown -R librenms:nginx /opt/librenms
sudo chmod -R 775 /opt/librenms
sudo setfacl -d -m g::rwx /opt/librenms/logs
sudo setfacl -d -m g::rwx /opt/librenms/rrd /opt/librenms/logs /opt/librenms/bootstrap/cache/ /opt/librenms/storage/
sudo setfacl -R -m g::rwx /opt/librenms/rrd /opt/librenms/logs /opt/librenms/bootstrap/cache/ /opt/librenms/storage/

Start LibreNMS Web Installer

Open http://librenms.example.com/install.php in your web browser to finish the installation.

Confirm that all Pre-Install Checks pass and click “Next Stage”.

Configure the database credentials as created earlier. The installer will start to import the database schema and populate data.

On the next page, you’ll be asked to configure the admin user account.

Username: admin
Password: StrongPassword

Next is the generation of the configuration file. If the installer fails to create it, you may have to create the file manually with the contents given. The file path should be /opt/librenms/.env.

sudo vim /opt/librenms/.env

# Database connection settings
DB_HOST=localhost
DB_DATABASE=librenms
DB_USERNAME=librenms_user
DB_PASSWORD=Password1234!

Change ownership of the file to the librenms user:

sudo chown librenms:librenms /opt/librenms/.env

Click the “Finish Install” button to complete the LibreNMS installation on CentOS 7. You should be greeted with an admin login page. Log in and select Validate Installation.

We also have other monitoring tutorials around Zabbix, Grafana, Prometheus, and InfluxDB.
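
Once the install is validated, the API mentioned in the feature list can be exercised from a script. The sketch below only builds the request for the device list and does not send it. It assumes the `/api/v0/devices` route and the `X-Auth-Token` header described in the LibreNMS API documentation; the hostname is the example one used in this guide, and `YOUR_API_TOKEN` is a placeholder for a token created in the web UI.

```python
import urllib.request


def devices_request(base_url, token):
    """Build (but do not send) a GET request for the LibreNMS device list."""
    url = base_url.rstrip("/") + "/api/v0/devices"
    return urllib.request.Request(url, headers={"X-Auth-Token": token})


# Placeholder host and token; replace with your own values.
req = devices_request("https://librenms.example.com/", "YOUR_API_TOKEN")
print(req.get_method(), req.full_url)
```

To actually call the API, pass `req` to `urllib.request.urlopen` and decode the JSON body of the response.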

0 notes

Text

Bitnami mean stack for ruby on rails

#BITNAMI MEAN STACK FOR RUBY ON RAILS FOR FREE#

#BITNAMI MEAN STACK FOR RUBY ON RAILS INSTALL#

#BITNAMI MEAN STACK FOR RUBY ON RAILS UPGRADE#

#BITNAMI MEAN STACK FOR RUBY ON RAILS FULL#

Invented by David Heinemeier Hansson, Ruby On Rails has been developed as an open-source project, with distributions available through. Bitnami DevPack is a comprehensive web stack with all the major back-end development languages. Dotnik Studio Company is a Dedicated Research, Design, and Development Company for next-gen SaaS startups, businesses, and. It comprises Python as the programming language, with Windows or Linux as the operating system, Apache as the server, MySQL or MongoDB as the database software. Dotnik Studio is a full-service Digital Product Design and Development Studio delivering delightful brands, products, and user experiences.

Rails is also an MVC (model, view, controller) framework where all layers are provided by Rails, as opposed to reliance on other, additional frameworks to achieve full MVC support. Django is the Python stack for web development. The framework also supports MySQL, PostgreSQL, SQLite, SQL Server, DB2 and Oracle. Rails can run on most Web servers that support CGI.

Ruby on Rails, sometimes known as "RoR" or just "Rails," is an open source framework for Web development in Ruby, an object-oriented programming (OOP) language similar to Perl and Python. The principal difference between Ruby on Rails and other frameworks for development lies in the speed and ease of use that developers working within the environment enjoy. Changes made to applications are immediately applied, avoiding the time-consuming steps normally associated with the web development cycle. According to David Geary, a Java expert, the Ruby-based framework is five to 10 times faster than comparable Java-based frameworks. In a blog posting, Geary predicted that Rails would be widely adopted in the near future.

Rails is made up of several components, beyond Ruby itself, including:

Active record, an object-relational mapping layer.

Action pack, a manager of controller and view functions.

Prototype, an implementer of drag and drop and Ajax functionality.

First of all, go to the Bitnami Ruby stack page and download and run the local installer. Once that is done, you should have a new directory that contains all of the components that Bitnami installed. You should also have a new command line shortcut in your start menu that is called Use Bitnami Ruby Stack. Bitnami Kubernetes Sandbox provides a complete, easy to deploy development environment for containerized apps.

BitNami Stacks can be installed in any directory. This allows you to have multiple instances of the same stack, without them interfering with each other.

By the time you click the 'finish' button on the installer, the whole stack will be integrated, configured and ready to go.

For example, you can upgrade your system's MySQL or Apache without fear of 'breaking' your BitNami Stack.

BitNami Stacks are completely self-contained, and therefore do not interfere with any software already installed on your system.

Our installers completely automate the process of installing and configuring all of the software included in each Stack, so you can have everything up and running in just a few clicks.

BitNami Stacks are built with one goal in mind: to make it as easy as possible to install open source software. Ruby on Rails is a full-stack MVC framework for database-backed web applications that is optimized for programmer happiness and sustainable productivity. It lets you write beautiful code by favoring convention over configuration.

BitNami RubyStack greatly simplifies the development and deployment of Ruby on Rails applications. It includes ready-to-run versions of Apache, MySQL, Ruby and Rails and required dependencies. It can be deployed using a native installer, as a virtual machine or in the cloud. BitNami RubyStack is distributed for free under the Apache 2.0 license.
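
The "convention over configuration" idea mentioned earlier is easiest to see in miniature. The toy sketch below is written in Python, not Ruby, and is not how Rails is implemented: a model class gets its table name from its class name by convention, and only classes that break the convention need to configure anything. The `Model` base class and the naive "add an s" pluralisation are assumptions made for the example.

```python
class Model:
    """Toy base class: the table name is derived from the class name by default."""

    table_name = None  # set this only to override the convention

    @classmethod
    def table(cls):
        if cls.table_name:
            return cls.table_name
        # Naive convention: lower-case the class name and append "s".
        return cls.__name__.lower() + "s"


class Order(Model):
    pass  # follows the convention, so no configuration is needed


class Person(Model):
    table_name = "people"  # irregular plural, so it is configured explicitly


print(Order.table(), Person.table())
```

Most classes look like `Order` and carry no configuration at all, which is where the productivity gain comes from.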

0 notes

Text

TOP 10 PHP Web Development Company

ArkssTech has come up with a bunch of innovative ideas to build an extraordinary web structure by using the wide range of PHP frameworks and organized methodologies. The interesting fact about our PHP Web Development Company is that we offer a great variety of features among which you can choose the selected features that might go right with your business niche.

Our PHP Web Development Services & Solutions are prepared after a deep analysis of the concerned market and its audience base. We ensure that every step in the website development life cycle proceeds under the supervision of experts and our valued clients.

Our team of highly skilled, passionate & dedicated PHP Developers brings us the confidence of delivering creative and result driven PHP Web Development Services with top notch quality.

Keeping customer satisfaction and quality as our top priorities, we give our developers full room for creativity and innovation so that they can stay in touch with the latest trends and developments in HTML5, CSS, jQuery, MySQL & other technologies.

PHP is one of the most commonly used and convenient programming languages, giving your website exceptional & comprehensive features. It gives your website flexibility while executing complex functions.

By joining hands with us, you get the tools, resources, skills and expertise required for outstanding PHP Web Application Development at very affordable pricing.

Why Choose Us As a PHP Development Company For Your Project?

Choose Vega Technologies LLC as your preferred PHP Development Company in USA & get a scalable, dynamic and secure web structure at a cost 40% lower than the competitors.

Vega Technologies LLC is known for building dynamic, database-driven and high performing web systems. With over (number) years of experience in PHP and MySQL Web Development, we have worked on many projects for (project fields).

We leverage a wide range of PHP frameworks and databases, using agile & custom PHP Web Development methodologies. See how you can benefit from working with us!

Dedicated PHP Developers

We have a team of (number) outstanding web developers. You can select any developer that you think can help you give your website a magnificent structure with efficient and seamless integration of the required technologies.

Proper Research & Business Knowledge

Before our web developer starts working on your project, we’ll have a quick conversation with you to learn about your business in detail. We then prepare a solid plan for the web development life cycle and complete it within the given time.

No Hidden Costing

By picking Vega Technologies as your PHP Web Development Company, you save on development costs and work fees. We also assure you that we never charge our clients any hidden costs.

Agile Methodology

To make the development process more measurable, we rely on agile development methodologies. You get a results-driven, flexible web structure that leaves room for future changes, all within your budget.

Strong Skillset

With developers certified in frameworks such as Zend and Laravel, we have a strong skill set for handling the most complex projects.

Cost Effective

Our team includes highly talented project managers who are experts at estimating the right budget, prioritizing tasks, arranging the workflow and delivering complex modules across project teams, so you can sit back, relax and wait for the best results.

Our PHP Development Services

Established as a PHP Development Company in the USA for 6 years, Vega Technologies LLC specializes in using rich agile methods for small-team software development.

Here are some of the services that we provide:

1. Custom PHP web application development

2. Custom PHP programming and scripting

3. Custom PHP product development

4. Existing application porting and migration

5. PHP and Flash/Flex Integration

6. PHP and Active Directory Integration Services

7. PHP and Python/Django Integration Services

8. Responsive web development with PHP, HTML5 and JavaScript

9. Python and ASP.NET Integration Services

10. Migration of existing Perl applications to PHP

11. Custom content management system development

12. Custom document management system development

13. Responsive web development using PHP Frameworks

14. PHP consulting services

15. Existing application support and maintenance

16. PHP web application QA testing services

More info: https://arksstech.com/php-web-development-company/


Text

Building A Web Scraper

Create A Web Scraper

Web Scraper Google Chrome

Web Scraper Lite

Web Scraper allows you to build sitemaps from different types of selectors. This system makes it possible to tailor data extraction to different site structures. Build scrapers, scrape sites and export data in CSV format directly from your browser, or export data in CSV, XLSX and JSON formats. Use Web Scraper Cloud to export data in formats such as CSV and XLSX.

Scrapy also has a number of built-in extensions for tasks like cookie handling, user-agent spoofing, restricting crawl depth, and others, as well as an API for easily building your own additions. For an introduction to Scrapy, check out the online documentation or one of its many community resources, including an IRC channel and a subreddit.

This question is related to link-building methods: I am doing SEO for a web development company, and the website has more than 2 lakh backlinks, the majority of which come from social bookmarking, article and press release submissions. In the recent Penguin 2.0 update, I lost my ranking on the majority of keywords.

Advanced tactics. 1. Customizing a web query. Once you create a Web Query, you can customize it to suit your needs. To access Web Query properties, right-click on a cell in the query results and choose Edit Query. When the Web page you’re querying appears, click on the Options button in the upper-right corner of the window to open the options dialog box.

What Are Web Scrapers And Benefits Of Using Them?

A Web Scraper is a data scraping tool that quite literally scrapes, or collects, data from websites. Web scraping is the process of extracting information and data from a website and transforming the information on a web page into a structured format for further analysis. Web Scraper is also a general term for the various methods used to extract and collect information from thousands of websites across the Internet. Generally, data scrapers let you get rid of copy-and-paste work. Those who use web scraping tools may be looking to collect certain data to sell to other users or to use for promotional purposes on a website. Web data scrapers are also called web data extractors, screen scrapers, or web harvesters. One of the great things about online web data scrapers is that they let us not only identify useful and relevant information but also store that information for later use.
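To make the idea of "transforming the information on a web page into a structured format" concrete, here is a minimal sketch using only the Python standard library. The sample HTML, the `TableParser` class and the `scrape_table` and `to_csv` helpers are all hypothetical names invented for this illustration; a real scraper would first download the page and would need to cope with messier markup.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this HTML first.
SAMPLE_HTML = """
<table>
  <tr><th>Name</th><th>City</th></tr>
  <tr><td>Acme Plumbing</td><td>Austin</td></tr>
  <tr><td>Best Bakery</td><td>Boston</td></tr>
</table>
"""


class TableParser(HTMLParser):
    """Collect the text of every table cell, grouped by row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # completed rows
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def scrape_table(html):
    """Return the table as a list of dicts keyed by the header row."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


def to_csv(records):
    """Serialize the records to CSV text for later analysis."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = scrape_table(SAMPLE_HTML)
print(records)
print(to_csv(records))
```

The same records could just as easily be written out as JSON; CSV is used here only because it is the export format mentioned most often above.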


How To Build Your Own Custom Scraper Without Any Skill And Programming Knowledge?



If you want to build your own scraper for any website without coding, you are lucky to have found this article. Making your own web scraper or web crawler is surprisingly easy with the help of this data miner, and it can also be surprisingly useful. With Anysite Scraper, you can build scrapers for multiple websites with a single click of a button, and you don’t need any programming to do it. Anysite Scraper can scrape any website or build a scraper for multiple websites, so you don’t need to buy multiple web data extractors to collect data from different sites. If you want to build a Yellow Pages data extractor, a Yelp data scraper, a Facebook data scraper, an Ali Baba web scraper, an Olx scraper, or similar scrapers for other websites, it is all possible with Anysite Scraper. Moreover, if you want to build your own database from a variety of websites or build your own custom scraper, an online web scraper is the best option for you.

Build a Large Database Easily With Anysite Scraper

In this modern age of technology, business success depends on big data, and businesses that don’t rely on data have a meager chance of success in a data-driven world. One of the best sources of data is the information publicly available online on social media sites and business directory websites. To get this data, you have to employ the technique called web data scraping, or data scraping. Building and maintaining a large number of web scrapers is a very complex project that, like any major project, requires planning, personnel, tools, budget, and skills.

Web scraping with Django and Python. Web Scraping using Django and Selenium, by Muhd Rahiman (Feb 25, 13 min read): this is a mini side project to tinker around with Django and Selenium by web scraping the FSKTM course timetable from MAYA UM as part of my self-learning prior to FYP. Automated web scraping with Python and Celery is available here. Making a web scraping application with Python, Celery, and Django here.

From a Django web-scraping question: an XHR GET request is sent to the URL via the requests library. If you're using Django, set up a form with a text input field for the URL on your HTML page. On submission, this URL will appear in the POST variables if you've set it up correctly.
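The form-based flow described above can be sketched without Django itself. In this minimal illustration, a plain dict stands in for the POST variables (in a Django view that would be `request.POST`), and the field name `url` plus the helpers `clean_submitted_url` and `extract_links` are hypothetical names chosen for the example. The download step is deliberately left out: in a real view, something like `requests.get(url).text` would supply the HTML.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


def clean_submitted_url(post_data):
    """Return the submitted URL if it is an absolute http(s) URL, else None."""
    url = (post_data.get("url") or "").strip()
    parts = urlparse(url)
    if parts.scheme in ("http", "https") and parts.netloc:
        return url
    return None


class LinkParser(HTMLParser):
    """Collect the href of every <a> tag as an absolute URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page that was scraped.
                self.links.append(urljoin(self.base_url, href))


def extract_links(base_url, html):
    """Return all link targets found in the given HTML."""
    parser = LinkParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


# Simulated form submission; the page body is hard-coded instead of fetched.
post_data = {"url": " https://example.com/courses "}
url = clean_submitted_url(post_data)
page = '<a href="/a">A</a><a href="b.html">B</a><a>no target</a>'
if url is not None:
    print(extract_links(url, page))
```

Validating the scheme before fetching is a small safeguard: it keeps the scraper from being pointed at non-web targets such as `javascript:` or `file:` URLs.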



You will most likely be hiring a few developers who know how to build scalable scrapers, setting up servers and related infrastructure to run these scrapers without interruption, and integrating the data you extract into your business processes. You can use full-service professionals such as Anysite Scraper to do all this for you or, if you feel brave enough, you can tackle it yourself.



Photo

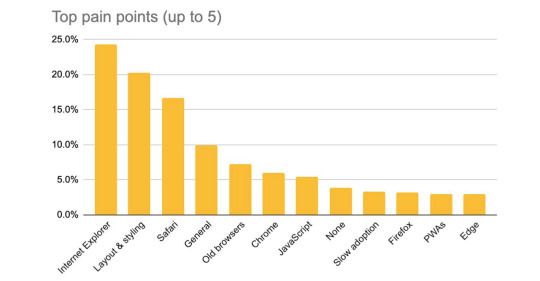

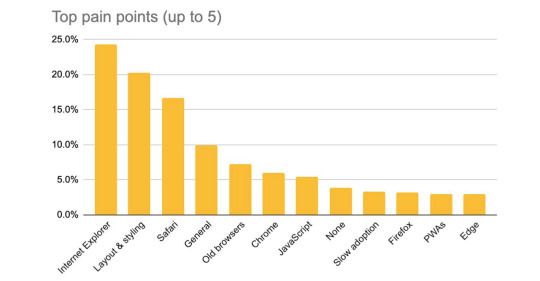

What are the web's pain points?

#459 — September 23, 2020


Frontend Focus

PDF: The MDN Browser Compatibility Report — This detailed write-up dives into the current pain points when it comes to browser support, testing, and compatibility, and what can be done to improve things (no surprise to see having to support IE 11 at the top here).

Mozilla

Accessible Web Animation: The WCAG on Animation Explained — Based on the recommendations of the Web Content Accessibility Guidelines, Val Head outlines both the strategic and tactical things we can do to create accessible animated content and interactions.

CSS-Tricks

Virtual Square UnBoxed 2020 — Learn how Square developers and partners are helping today’s business owners adapt. See how the Square platform is being used for stability and survival, and get inspired by innovative solutions to this year’s unprecedented challenges.

Square sponsor

Finding The Root Cause of a CSS Bug — Highlights the importance of finding the source of a bug, with plenty of examples and how to solve them from the ground up.

Ahmad Shadeed

Vital Web Performance — “with so many asynchronous patterns in use today, how do we even define what ‘slow’ is”... Well, various new ‘Web Vitals’ metrics are meant to define just that. Here’s an explainer, covering the various implementations and requirements for each.

Todd Gardner

⚡️ Quick bits:

Cloudflare is now working with the Internet Archive to automatically archive certain content to the Wayback Machine.

Firefox is currently working on a tabbing order accessibility feature. Feedback wanted.

Microsoft's Erica Draud runs through the latest developer tool improvements you can expect in Edge.

Version 81 of Firefox landed yesterday, with not much to speak of in the way of notable developer changes. But the consumer-facing release notes include details on new credit card saving and PDF filling features.

Working with PWAs? This Service Worker Detector browser extension for Safari may prove handy.

The Microsoft Edge team have introduced new APIs for dual screen and foldable devices.

Version 10 of the Tor Browser is out now.

Catchpoint have acquired performance monitoring tool Webpagetest.

💻 Jobs

Stream Provides APIs for Building Activity Feeds and Chat — Stream is looking for a highly motivated Django/Python developer to seamlessly connect various API-driven platforms with the Stream ecosystem.

Stream

Find a Job Through Vettery — Create a profile on Vettery to connect with hiring managers at startups and Fortune 500 companies. It's free for job-seekers.

Vettery

➡️ Looking to share your job listing in Frontend Focus? More info here.

📙 Tutorials, Articles & Opinion